EMC3 Projects

ZFS Improvements

ZFS is an open-source file system and volume manager that offers high data integrity and a rich feature set, all through software-defined storage pools. LANL uses ZFS in multiple storage tiers, ranging from Lustre scratch storage systems to the colder campaign storage tier. As LANL migrates from rotating hard disk drives to low-latency, high-bandwidth NVMe flash devices, ZFS must be able to leverage these devices and capture all the performance they provide. When LANL first evaluated ZFS with NVMe SSDs, a significant portion of the available device bandwidth went unused: roughly forty-five percent for writes and seventy percent for reads across various ZFS storage pool configurations. This gap stemmed mainly from ZFS design elements that were originally built to mask the latency of slow rotating hard disk drives. LANL has been working on two projects to improve ZFS performance on NVMe devices: the first adds direct-io support to ZFS, and the second allows ZFS to harness the capabilities of NVMe computational storage devices.

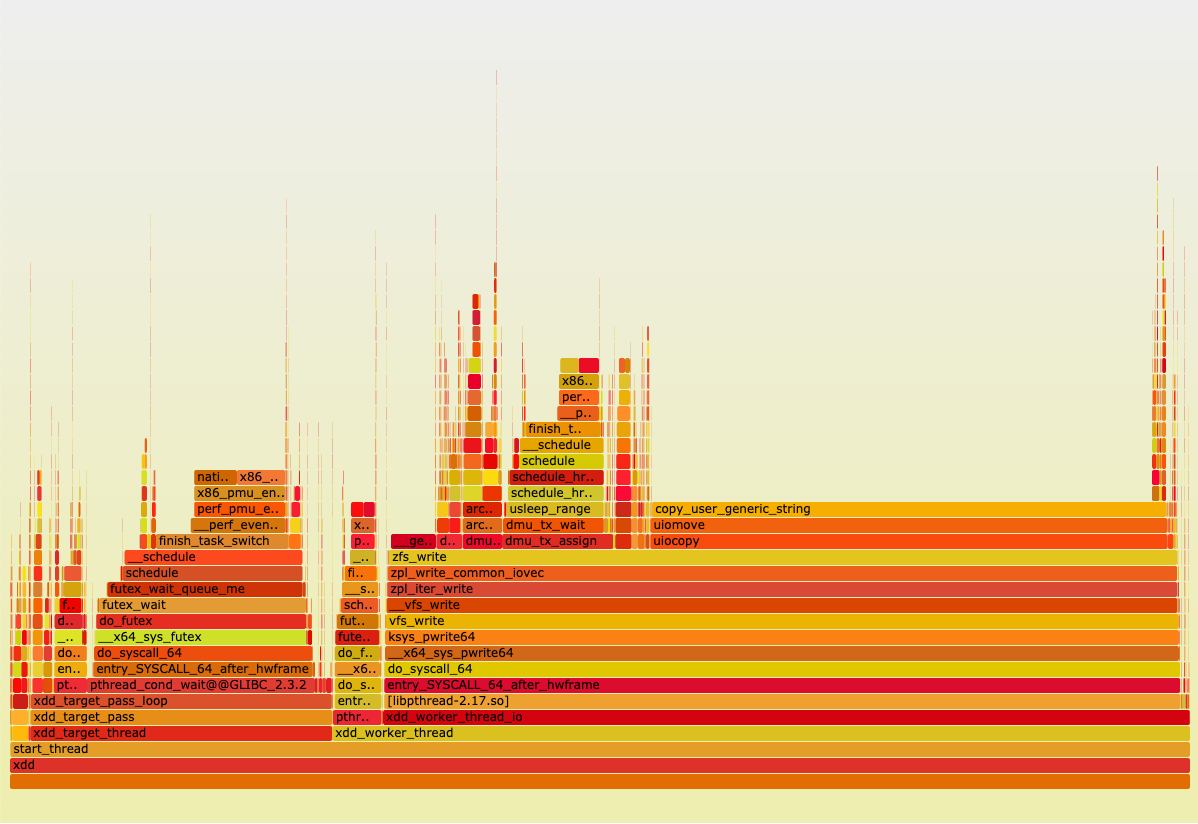

Our direct-io work aims to redesign the read and write paths in ZFS to bypass the Adjustable Replacement Cache (ARC), avoiding the high cost of memory copies. The ARC holds data that will later be written out transactionally and caches recently read data; it was designed to mask the latency of hard disk drives. Copying data through memory instead of issuing I/O directly to devices has long been a common file system approach. While this still works for masking the limitations of rotating media, NVMe devices behave differently: they store data in NAND flash memory and provide low latency and high bandwidth even for random I/O patterns. Many of LANL’s I/O workloads consist of large sequential writes that are rarely read, and for these workloads copying data into the ARC was far more expensive than sending I/O requests directly to the flash devices in a ZFS storage pool. Direct-io lets users request that ZFS bypass the ARC completely and read and write directly to the underlying storage devices. With direct-io, ZFS captures ninety percent of the available NVMe device bandwidth for LANL I/O workloads across various storage pool redundancy configurations.
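The text does not say how users request direct-io. As a minimal sketch, assuming the patched ZFS honors the standard Linux `O_DIRECT` open flag, the Python below writes a buffer with `O_DIRECT` so the data is handed to the file system without first being copied into a cache. `O_DIRECT` generally requires aligned buffers, offsets, and lengths; the 4 KiB alignment and the helper names `write_direct` and `_write_once` are illustrative assumptions, not part of the ZFS patch. If a file system rejects `O_DIRECT` with `EINVAL`, the sketch falls back to an ordinary buffered write and reports that it did so.

```python
import errno
import mmap
import os

# Assumption: 4 KiB satisfies the device/file system alignment requirement.
ALIGN = 4096


def _write_once(path: str, buf: mmap.mmap, length: int, extra_flags: int) -> None:
    """Hypothetical helper: write the whole aligned buffer, then trim padding."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC | extra_flags, 0o644)
    try:
        if os.write(fd, buf) != len(buf):
            raise OSError(errno.EIO, "short write")
        os.ftruncate(fd, length)  # drop the alignment padding from the file
    finally:
        os.close(fd)


def write_direct(path: str, payload: bytes) -> bool:
    """Hypothetical helper: write payload with O_DIRECT when supported.

    Returns True if O_DIRECT was used, False if a buffered write was used.
    """
    # Round the length up to a multiple of ALIGN (at least one block).
    padded = max(ALIGN, -(-len(payload) // ALIGN) * ALIGN)
    buf = mmap.mmap(-1, padded)  # anonymous mappings are page aligned
    try:
        buf[: len(payload)] = payload
        o_direct = getattr(os, "O_DIRECT", None)  # Linux-only flag
        if o_direct is not None:
            try:
                _write_once(path, buf, len(payload), o_direct)
                return True
            except OSError as exc:
                if exc.errno != errno.EINVAL:  # only fall back on "unsupported"
                    raise
        _write_once(path, buf, len(payload), 0)  # buffered fallback
        return False
    finally:
        buf.close()
```

The page-aligned anonymous `mmap` avoids a separate aligned-allocation step, and the final `ftruncate` trims the padding so the file length matches the payload.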

While the direct-io project has allowed LANL to better leverage NVMe devices with ZFS, performance still falls short when data protection schemes and data transformations are enabled. LANL’s ZFS file systems use checksums, erasure coding, and compression to protect data integrity and shrink the overall I/O footprint of datasets. However, these operations are not only computationally expensive; combined, they also require multiple passes over the data in memory, which makes them memory-bandwidth intensive. For example, a pool using erasure coding with two parity devices, gzip compression, and checksums achieves only six percent of the available ZFS performance through the ARC. To remedy this, LANL has added functionality that allows ZFS to offload checksums, erasure coding, and compression to computational storage devices. This functionality was developed in two parts. The first is a layer in ZFS called the ZFS Interface for Accelerators (Z.I.A.), which works with ZFS data structures and lets users specify which operations to offload to computational storage devices. The second is an open-source kernel module called the DPU-SM, which Z.I.A. leverages. The DPU-SM provides generic hooks that let any file system communicate with computational storage devices in a standard way, while allowing a variety of offload providers to register their implementations for file systems to consume. With this patch set, ZFS storage pools using checksums, erasure coding, and gzip compression gain a 16x speedup.
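To make the point about multiple passes concrete, the following minimal Python sketch runs the three operations in software on a single block: DEFLATE compression via `zlib` (the algorithm behind gzip), a SHA-256 checksum of the compressed data, and double parity computed as P (XOR) and Q (a Reed-Solomon syndrome over GF(2^8), in the style of RAID-6) across four data columns. ZFS's real RAIDZ layout, checksum choices, and parity code differ in detail. The `register_provider` registry is a hypothetical illustration of the DPU-SM idea that offload providers register implementations and anything unregistered stays on the CPU; it is not the DPU-SM or Z.I.A. API.

```python
import hashlib
import zlib
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for the DPU-SM idea: offload providers register an
# implementation per operation; anything unregistered runs in software.
_providers: Dict[str, Callable] = {}


def register_provider(op: str, fn: Callable) -> None:
    """Register an implementation for 'compress', 'checksum' or 'parity'."""
    _providers[op] = fn


def gf_mul2(x: int) -> int:
    """Multiply by 2 in GF(2^8) using the RAID-6 polynomial 0x11d."""
    x <<= 1
    return x ^ 0x11D if x & 0x100 else x


def pq_parity(columns: List[bytes]) -> Tuple[bytes, bytes]:
    """Double parity: P = XOR of columns, Q = sum of 2^i * D_i in GF(2^8)."""
    width = len(columns[0])
    p, q = bytearray(width), bytearray(width)
    for col in reversed(columns):  # Horner's rule over D_{k-1} .. D_0
        for j in range(width):
            p[j] ^= col[j]
            q[j] = gf_mul2(q[j]) ^ col[j]
    return bytes(p), bytes(q)


def software_write_path(block: bytes, data_cols: int = 4):
    """Compress, checksum, and compute double parity for one block.

    Each stage walks the whole buffer again, which is what makes the
    combination memory-bandwidth intensive on the host CPU.
    Returns (compressed length, checksum, data columns, P, Q).
    """
    compress = _providers.get("compress", lambda buf: zlib.compress(buf, 6))
    checksum = _providers.get("checksum", lambda buf: hashlib.sha256(buf).digest())
    parity = _providers.get("parity", pq_parity)

    comp = compress(block)   # pass 1: read the block, write a compressed copy
    digest = checksum(comp)  # pass 2: read the compressed data again
    width = max(1, -(-len(comp) // data_cols))
    padded = comp.ljust(width * data_cols, b"\0")
    cols = [padded[i * width:(i + 1) * width] for i in range(data_cols)]
    p, q = parity(cols)      # pass 3: read every data column again
    return len(comp), digest, cols, p, q
```

Because the pipeline looks up each stage in the registry, a provider can replace one stage without changing the caller; that separation, rather than the specific parity or checksum code, is what the sketch is meant to show.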

Both the direct-io and Z.I.A. patches are currently open pull requests against the OpenZFS master branch. Together, they will allow ZFS to fully leverage the capabilities of NVMe devices.

ZFS Interface for Accelerators paper available at https://www.osti.gov/biblio/1839061

ZFS changes at https://github.com/hpc/zfs